Avoid This Mistake with Bulk Insert in Node.js and MySQL

Last week, I ran into a serious performance issue while working on a bulk insert feature using Node.js and MySQL. The application’s memory usage kept increasing until it eventually crashed. After hours of debugging, I realized the root cause: I was using execute() for bulk inserts with a batch size of 5000. This mistake led to memory leaks that overwhelmed the system. In this post, I’ll break down the difference between query() and execute(), share what went wrong, and offer practical solutions to help you avoid this issue.

Understanding query() vs execute() in MySQL + Node.js

What is query()?

The query() method in mysql2 or mysql libraries constructs the full SQL string by interpolating parameters before sending it to the MySQL server. It doesn’t use prepared statements or any server-side caching.

- Pros: Simple to use, efficient for one-time operations.

- Cons: Vulnerable to SQL injection if not properly escaped. Less efficient for repetitive queries.

What is execute()?

The execute() method uses prepared statements. It sends the SQL statement and parameters separately to the server. The statement is cached on the server, and on the client (via LRU cache), making it efficient for repeated execution.

- Pros: Safe against SQL injection, high performance for repeated identical queries.

- Cons: Prepared statement cache can grow uncontrollably if not reused properly, leading to out-of-memory errors.

Quick Comparison: query() vs execute()

| Feature / Usage Context | query() | execute() |

|---|---|---|

| SQL Injection Protection | ❌ Requires manual sanitization | ✅ Automatic parameter binding |

| Performance for Repeated Queries | ❌ New query parsed each time | ✅ Faster with reuse (Prepared Statement) |

| Best for Bulk Inserts | ✅ Yes, allows large multi-value insert in one SQL string | ❌ No, creates new prepared statement each time |

| Memory Management | ✅ No caching overhead | ❌ Can cause memory leaks if not manually unprepared |

| Ease of Use | ✅ Simple to implement | ⚠️ Needs cache awareness / unprepare |

| Prepared Statement Reuse | ❌ Not available | ✅ Reuses prepared SQL with different params |

| Used When… | Inserting big batch once, quick queries | Running the same query repeatedly with different inputs |

The Memory Leak I Faced Using execute()

My implementation looked simple at first: I used mysql2‘s execute() method to insert a batch of 5000 records repeatedly. Each call used a different parameter set. But behind the scenes, each execute() created a new prepared statement stored in memory.

As the app continued running, the memory usage increased with every new batch insert. Eventually, my server crashed due to out-of-memory (OOM). I learned that the client-side cache (in mysql2) and the MySQL server’s prepared statement cache were filling up and not being cleared, since I wasn’t reusing statements or explicitly releasing them.

Why execute() Is Not Ideal for Bulk Inserts

Prepared statements are designed for repeated use with the same SQL query. But in my case, the SQL statement stayed the same, while the parameters changed every time. Since I wasn’t reusing the statement, each call to execute() created a new entry in the cache. That’s fine for a few hundred statements—but not for tens of thousands.

Moreover, the mysql2 client keeps a Least Recently Used (LRU) cache. Once this cache gets full, it doesn’t clear prepared statements immediately. Instead, memory usage climbs, leading to instability and eventual crashes.

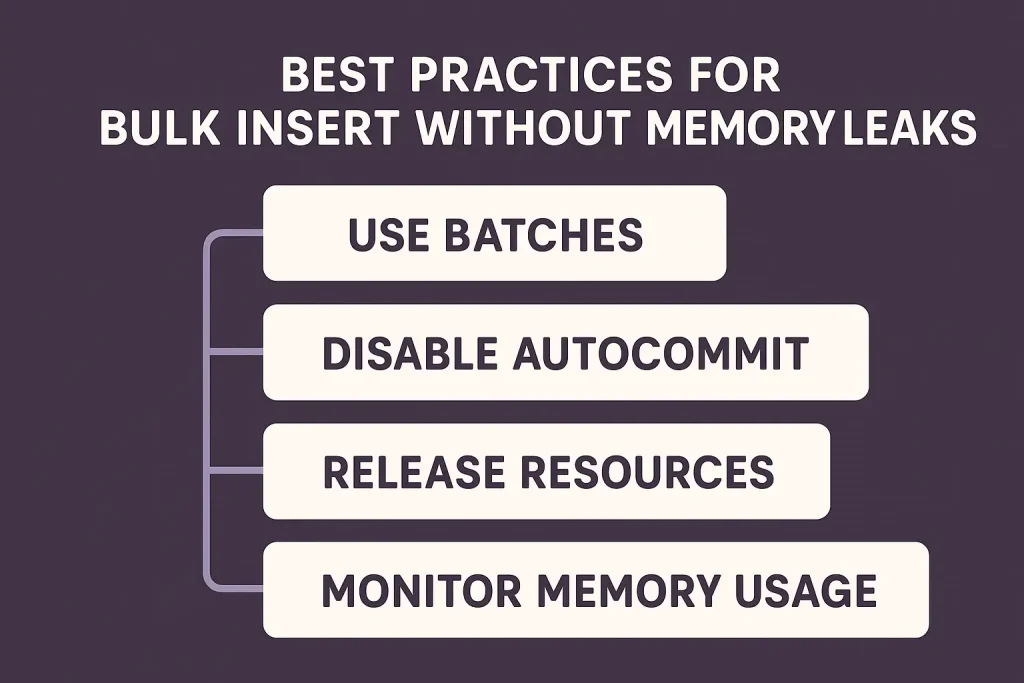

Best Practices for Bulk Insert Without Memory Leaks

✅ 1. Use query() for Bulk Insert

Construct a full multi-value SQL insert and use query() to execute it.

const values = records.map(r => `(${r.id}, '${r.name}')`).join(',');

const sql = `INSERT INTO users (id, name) VALUES ${values}`;

await connection.query(sql);

- This method avoids prepared statement overhead.

- Suitable for inserting large batches.

✅ 2. Use prepare() and unprepare() explicitly (if you must use prepared statements)

const stmt = await connection.prepare("INSERT INTO users (id, name) VALUES (?, ?)");

for (let record of records) {

await stmt.execute([record.id, record.name]);

}

await stmt.unprepare();

- Manually control prepared statement lifecycle.

- Prevents buildup of unused prepared statements.

✅ 3. Reduce Your Batch Size

Smaller batches (e.g., 1000–2000 rows) are less memory-intensive. While it may increase insert time slightly, it helps avoid memory spikes.

When You Should Still Use execute()

While execute() isn’t ideal for bulk inserts, it’s perfect for:

- Single-row inserts

- Repeatedly running the exact same SQL query with different parameters

- Read-heavy applications with frequent filtering

Example:

const sql = "SELECT * FROM users WHERE email = ?";

await connection.execute(sql, [email]);

Bonus Tips to Optimize Inserts

- Use connection pooling to reuse DB connections efficiently.

- Monitor prepared statements using:

SHOW GLOBAL STATUS LIKE 'Com_prepare_%'; - Consider MySQL bulk insert limits (packet size, max allowed rows).

- Use transactions if batching many inserts for atomicity.

Conclusion

Bulk inserts in Node.js and MySQL are deceptively tricky. I learned the hard way that execute()—while powerful—isn’t suitable for large dynamic inserts due to memory caching. If you’re inserting thousands of records, use query() with a single SQL statement or carefully manage your prepared statements. By applying these best practices, you can avoid memory leaks, speed up your inserts, and build a more stable application.

FAQs

❓ Can I still use execute() for bulk inserts?

Only if you manually manage prepared statements and ensure reuse. Otherwise, prefer query().

❓ What batch size is ideal for bulk insert?

Typically 1000–2000 rows, depending on your server’s memory and MySQL settings.

❓ How do I track prepared statement usage?

Use the SQL command:

SHOW GLOBAL STATUS LIKE 'Com_prepare_%';