Understanding the Limits of LIMIT and OFFSET for Large Datasets

Introduction

When dealing with large datasets (e.g., over 100 million records), using LIMIT and OFFSET for pagination can lead to significant performance problems. This post will explain how the algorithm works, why it can be inefficient for large datasets, and explore alternative strategies for better performance.

1. How the LIMIT and OFFSET Algorithm Works

LIMIT and OFFSET are often used for paginating database queries by returning a subset of records.

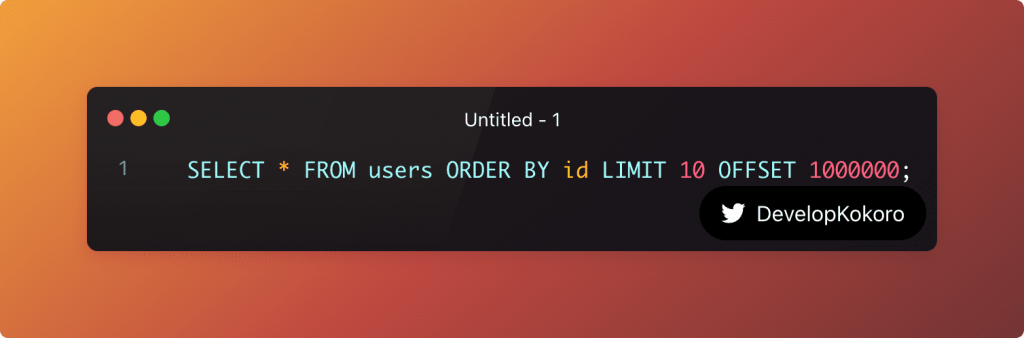

- LIMIT: Specifies the maximum number of rows to return.

- OFFSET: Specifies the number of rows to skip before starting to return rows.

Query Example:

- A query with LIMIT 10 OFFSET 1000000 will return 10 rows starting from the 1,000,001st row in the table.

Behind the scenes:

- The database still scans all rows up to the OFFSET value, meaning it must process (but not return) all 1,000,000 rows in this case before it can deliver the next 10.
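
To make the semantics concrete, here is a minimal sketch using Python's built-in sqlite3 module. The items table, its columns, and the small row count are assumptions made for illustration; the offset is scaled down from the 1,000,000 in the text so the example runs instantly.

```python
import sqlite3

# In-memory table with ids 1..1000 (a small stand-in for a large table).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO items (id, name) VALUES (?, ?)",
    [(i, f"item-{i}") for i in range(1, 1001)],
)

# Skip the first 100 rows in id order, then return the next 10.
page = conn.execute(
    "SELECT id FROM items ORDER BY id LIMIT ? OFFSET ?", (10, 100)
).fetchall()
page_ids = [row[0] for row in page]
print(page_ids)  # ids 101 through 110
```

The 100 skipped rows never reach the caller, but the database still had to step over them to find row 101.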

2. Performance Impact on Large Datasets

- Linear Growth of Query Time: As the OFFSET value grows, the query time increases roughly linearly because the database must scan more rows. For example, querying with an offset of 10,000,000 will be significantly slower than querying with an offset of 1,000.

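- Inefficient Use of Indexes: An index can supply rows in sorted order, but it cannot jump straight to the Nth row. With large offsets, the database still has to walk past every skipped entry, so the index helps far less than it does for a direct lookup.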

- Memory and Disk Usage: When the ORDER BY cannot be served from an index, the database has to sort the rows before it can skip any of them. On large tables, that intermediate result can exceed available memory, leading to potential disk spills and slower query times.
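
One way to see the growth without relying on wall-clock timing is to count how much work the engine does. The sketch below uses SQLite's progress handler (exposed in Python as Connection.set_progress_handler) as a rough proxy for virtual machine instructions executed. The items table and the count_vm_steps helper are hypothetical names for this illustration, and other databases may behave differently in detail.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO items (id, name) VALUES (?, ?)",
    [(i, f"item-{i}") for i in range(1, 20001)],
)

def count_vm_steps(offset):
    """Run one paginated query and return how often SQLite called the
    progress handler, an approximate measure of the work performed."""
    steps = 0

    def on_progress():
        nonlocal steps
        steps += 1
        return 0  # 0 tells SQLite to keep running the query

    # Ask for a callback roughly every VM instruction.
    conn.set_progress_handler(on_progress, 1)
    conn.execute(
        "SELECT id, name FROM items ORDER BY id LIMIT 10 OFFSET ?", (offset,)
    ).fetchall()
    conn.set_progress_handler(None, 1)  # remove the handler again
    return steps

shallow = count_vm_steps(10)
deep = count_vm_steps(19000)
print(shallow, deep)
```

Both queries return 10 rows, yet the deep page reports far more callbacks, because every skipped row still costs a loop iteration inside the engine.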

3. Real-World Example: Slow Queries with High OFFSET Values

Imagine you have a table with 100M rows and you are trying to fetch the next page of data:

SELECT * FROM orders ORDER BY created_at LIMIT 20 OFFSET 50000000;

Even though you’re asking for just 20 rows, the database will scan 50 million rows before it starts returning results.

4. Why is This a Problem?

- High Computational Cost: Skipping rows means the database is performing unnecessary work. It scans rows, applies sorting, and then discards them. The more rows it skips, the worse the performance.

- Dynamic Data: If records are being inserted or deleted frequently, the results can become inconsistent. A row might move from one page to another in between queries, leading to missing or duplicated data.
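
The dynamic-data problem is easy to reproduce. In this sketch (the posts table and fetch_page helper are assumptions for illustration), a single insert between two page requests pushes every row down by one position, so the second page repeats a row the user has already seen.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO posts (id, title) VALUES (?, ?)",
    [(i, f"post-{i}") for i in range(1, 11)],
)

def fetch_page(page_number, page_size=3):
    """Newest-first page using LIMIT/OFFSET (page_number starts at 0)."""
    rows = conn.execute(
        "SELECT id FROM posts ORDER BY id DESC LIMIT ? OFFSET ?",
        (page_size, page_number * page_size),
    ).fetchall()
    return [row[0] for row in rows]

page_one = fetch_page(0)  # [10, 9, 8]

# A new post arrives while the user is still reading page one.
conn.execute("INSERT INTO posts (id, title) VALUES (11, 'post-11')")

page_two = fetch_page(1)  # [8, 7, 6]: id 8 shows up a second time

repeated = sorted(set(page_one) & set(page_two))
print(page_one, page_two, repeated)
```

A delete between the two requests has the opposite effect: rows shift up by one and a row is silently skipped.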

5. Alternative Strategies for Pagination

A. Keyset Pagination (Cursor-based Pagination)

Keyset pagination is a common alternative to LIMIT and OFFSET when working with large datasets. Instead of skipping rows, it uses a reference column (usually an indexed field like id or created_at) to fetch the next set of results.

Example:

SELECT * FROM users
WHERE id > last_seen_id
ORDER BY id ASC
LIMIT 10;

Advantages:

- Efficient for Large Datasets: It avoids scanning and discarding rows as OFFSET does. With an index on the cursor column, it fetches only the required records directly.

- Consistent Performance: Keyset pagination does not slow down as you page deeper into the dataset, because each query uses the index to seek straight to its starting point.

- No Skipped Rows: Since the query doesn’t “skip” rows, the database doesn’t do unnecessary work, making it very efficient.
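
The query above can be wrapped in a small paging loop. This is a minimal sketch with Python's sqlite3 module; the users table contents and the fetch_after helper are assumptions for illustration, and the starting cursor of 0 assumes every real id is positive.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(i, f"user-{i}") for i in range(1, 26)],
)

def fetch_after(last_seen_id, page_size=10):
    """Return the next page of (id, name) rows with id greater than last_seen_id."""
    return conn.execute(
        "SELECT id, name FROM users WHERE id > ? ORDER BY id ASC LIMIT ?",
        (last_seen_id, page_size),
    ).fetchall()

pages = []
last_seen_id = 0  # assumed to be smaller than every real id
while True:
    page = fetch_after(last_seen_id)
    if not page:
        break
    pages.append([row[0] for row in page])
    last_seen_id = page[-1][0]  # the cursor is the id of the last row returned

print([len(p) for p in pages])  # [10, 10, 5]
```

In an API, last_seen_id would typically travel back to the client as an opaque cursor and come in again with the next request.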

Disadvantages:

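- Handling Record Deletions:

- If records are removed or changed between page loads, pages can shift relative to what the user has already seen, and a cursor built on a non-unique or mutable column can miss or repeat rows.

- Example: If the row with id=1005 is deleted and you paginate based on id, the next query with id > 1005 still returns the correct rows, because the comparison uses the value and not the row itself. The results go wrong when the cursor column can change or contains duplicates, such as a timestamp shared by several rows.

- Solution: To mitigate this, use a stable, unique ordering, for example an immutable column like created_at combined with id as a tie-breaker, to ensure ordering stays consistent even if some records are deleted.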

- Limited Flexibility with Sorting:

- Keyset pagination is tightly coupled to the sorting order (usually id or created_at). This makes it less flexible if you need to sort results by other columns, such as a complex calculated field.

- For instance, paginating based on a column like price would be more challenging, as records with the same price could create ambiguities in the pagination unless a unique tie-breaker such as id is added to the ordering.

- No Random Access to Pages:

- Unlike LIMIT and OFFSET, where you can access any page at any time, keyset pagination works in a sequential manner. You cannot “jump” to page 10 directly without retrieving the data from all the previous pages. This can be limiting for user interfaces that require random page access.

- Complex Queries for Large Tables:

- For very large tables, the keyset pagination query might become more complex if you need to paginate based on multiple fields. For instance, when dealing with multi-column ordering (e.g., created_at, id, and a status field), the query and indexing strategy must be carefully crafted to maintain performance.
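
The multi-column case can be sketched as follows. Here the ordering is (price, id), with id acting as a unique tie-breaker so that rows sharing a price are neither skipped nor repeated. The products table, the index name, and the fetch_after helper are hypothetical; the WHERE clause is written in expanded form so it does not depend on row-value comparison support.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price INTEGER)")
# Several products share a price, so price alone cannot serve as a cursor.
conn.executemany(
    "INSERT INTO products (id, price) VALUES (?, ?)",
    [(1, 500), (2, 300), (3, 500), (4, 300), (5, 500), (6, 700), (7, 300)],
)
# A composite index matching the sort order lets the database seek to the cursor.
conn.execute("CREATE INDEX idx_products_price_id ON products (price, id)")

def fetch_after(cursor, page_size=2):
    """Next page ordered by (price, id); cursor is the last (price, id) seen, or None."""
    if cursor is None:
        sql = "SELECT id, price FROM products ORDER BY price, id LIMIT ?"
        params = (page_size,)
    else:
        last_price, last_id = cursor
        sql = (
            "SELECT id, price FROM products "
            "WHERE price > ? OR (price = ? AND id > ?) "
            "ORDER BY price, id LIMIT ?"
        )
        params = (last_price, last_price, last_id, page_size)
    return conn.execute(sql, params).fetchall()

seen_ids = []
cursor = None
while True:
    page = fetch_after(cursor)
    if not page:
        break
    seen_ids.extend(row[0] for row in page)
    cursor = (page[-1][1], page[-1][0])  # (price, id) of the last row on the page

print(seen_ids)  # [2, 4, 7, 1, 3, 5, 6]
```

With a page size of 2, the group of products priced at 300 is split across two pages, and the tie-breaker is what keeps id 7 from being lost at that boundary.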

When to Use Keyset Pagination:

- Keyset pagination works best when:

- You are displaying data in sequential order.

- You don’t need to provide random access to pages.

- The underlying data is relatively stable, with few deletions or re-orderings.

When Not to Use It:

- It may not be ideal if:

- Your dataset involves frequent deletions or dynamic reordering.

- You need to allow users to jump to specific pages (e.g., “go to page 100”).

- You need to sort by multiple columns or less stable fields.

B. Using Window Functions

Some databases (e.g., PostgreSQL) support window functions, which can be used to number rows dynamically and then select a page by row number.

Example:

SELECT * FROM (
  SELECT *, ROW_NUMBER() OVER (ORDER BY id) AS rownum
  FROM users
) AS numbered_users
WHERE rownum BETWEEN 1000001 AND 1000010;

- Depending on the database and its query planner, this approach can be more efficient than using a plain LIMIT and OFFSET, but that is not guaranteed.

Advantages:

- Accurate Pagination:

- Window functions allow for exact page boundaries, making it easy to fetch specific rows without relying on potentially unstable id values.

- No Large Offset Skips:

- The row numbering happens as part of the query execution, so the query does not need an explicit OFFSET (as with LIMIT and OFFSET). The database computes row numbers dynamically, although it generally still has to number the rows that come before the requested range.

- More Flexibility in Sorting:

- Unlike keyset pagination, window functions let you sort by multiple columns without rewriting the paging condition. For instance, you can paginate based on created_at, price, or any combination of fields, making it more flexible for complex sorting needs.
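
A small runnable version of the ROW_NUMBER() approach is sketched below with Python's sqlite3 module. It assumes the bundled SQLite is version 3.25 or newer, which is when window functions were added. The users data and the fetch_rows helper are made up for illustration, and the ids deliberately have gaps to show that row numbers are independent of id values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
# ids have gaps (10, 20, 30, ...), so row numbers and ids differ.
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(i * 10, f"user-{i}") for i in range(1, 51)],
)

def fetch_rows(first_row, last_row):
    """Return (id, rownum) pairs for the rows numbered first_row..last_row."""
    return conn.execute(
        """
        SELECT id, rownum FROM (
            SELECT id, ROW_NUMBER() OVER (ORDER BY id) AS rownum
            FROM users
        ) AS numbered_users
        WHERE rownum BETWEEN ? AND ?
        ORDER BY rownum
        """,
        (first_row, last_row),
    ).fetchall()

page = fetch_rows(11, 15)
print(page)  # [(110, 11), (120, 12), (130, 13), (140, 14), (150, 15)]
```

The page boundaries are exact, but whether the engine can stop numbering rows early depends on the database and its optimizer.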

Disadvantages:

- Performance Issues with Large Datasets:

- For very large tables, window functions can be slow, as the database needs to compute the row numbers for all rows in the result set before filtering. This can lead to high CPU and memory usage, especially if you’re dealing with millions of records.

- Even if you’re fetching a small set of records, the database still has to process the entire result set to assign row numbers.

- Handling Deleted or Inserted Rows:

- If records are deleted or inserted between pages, the row numbers will change, causing inconsistent pagination results. For example, the second query may return overlapping or missing rows compared to the first query if the underlying data changes.

- Solution: One way to mitigate this is to cache the results temporarily or lock the dataset, but this is not always practical, especially for dynamic data.

- Limited Database Support:

- Not all databases support window functions, so your ability to use them may be restricted depending on the database you’re working with. While they are available in modern systems like PostgreSQL and SQL Server, they might not be available in older or simpler databases like MySQL (before version 8.0).

- Complex Queries Can Be Hard to Optimize:

- As the complexity of your query increases (e.g., using window functions in combination with other subqueries or joins), performance tuning becomes more difficult. Optimizing such queries requires a deeper understanding of the database execution plan and indexing strategies.

- Queries using window functions might not leverage indexes as efficiently as simpler queries, which can lead to performance bottlenecks.

C. Partitioning the Dataset

- Partitioning breaks a large table into smaller, more manageable pieces (e.g., by date or some other range).

- Queries can then focus on the relevant partition, reducing the overall query time.

- This works well for very large, high-traffic datasets.

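- But it has some limitations: it only helps when queries filter on the partition key, and it adds schema and maintenance overhead.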
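
The idea can be illustrated with a rough emulation. SQLite has no native table partitioning, so the sketch below stands in one table per year for one partition per year; a database such as PostgreSQL or MySQL would do this routing itself through its own partitioning DDL. The orders_2023 and orders_2024 tables and both helper functions are hypothetical names used only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
YEARS = (2023, 2024)

# One table per year stands in for one partition per year.
for year in YEARS:
    conn.execute(
        f"CREATE TABLE orders_{year} (id INTEGER PRIMARY KEY, created_at TEXT)"
    )

def insert_order(order_id, created_at):
    """Route a row to the table matching the year of its ISO date string."""
    year = int(created_at[:4])
    if year not in YEARS:
        raise ValueError(f"no partition for year {year}")
    conn.execute(
        f"INSERT INTO orders_{year} (id, created_at) VALUES (?, ?)",
        (order_id, created_at),
    )

def first_orders(year, page_size):
    """Read a page from one partition only; other years are never touched."""
    if year not in YEARS:
        raise ValueError(f"no partition for year {year}")
    rows = conn.execute(
        f"SELECT id FROM orders_{year} ORDER BY created_at, id LIMIT ?",
        (page_size,),
    ).fetchall()
    return [row[0] for row in rows]

insert_order(1, "2023-03-01")
insert_order(2, "2024-01-15")
insert_order(3, "2023-11-20")
insert_order(4, "2024-06-30")

page_2024 = first_orders(2024, 10)
print(page_2024)  # [2, 4]
```

A query for 2024 only ever reads the 2024 table, which is the same effect partition pruning aims for in databases with built-in partitioning.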

6. Choosing the Right Strategy

The right strategy depends on your dataset and use case. For large, growing datasets with millions of records, avoid LIMIT and OFFSET in favor of keyset pagination or partitioning. Use window functions if your database supports them, and consider precomputed results if you need high-speed responses for frequently accessed pages.

Conclusion

Pagination is crucial when working with large datasets, but using LIMIT and OFFSET for large datasets can lead to severe performance issues. Understanding the algorithm’s limitations and implementing better strategies such as keyset pagination, window functions, or partitioning can greatly improve your query performance.

ref:

https://dev.mysql.com/doc/refman/8.4/en/limit-optimization.html